The issue

I have been looking at version numbering for a project where the developers had been stuck at 0.0.1-SNAPSHOT for 12 months and were starting to have trouble getting the correct JARs for their projects from their binary repository.

The solution was to use the features that are present in Maven and Jenkins to assist them in their processes.

I covered the basics of version numbering in another post (May 2012), but that only gives the version strategy for Apache projects. Since then I have come across Semantic Versioning, which is a great and almost definitive source on how you should work with versions.

However, it does not cater for the way Maven actually treats version numbers; it is merely compatible with it.

A good version number has a number of properties:

- Natural order: given two versions, it should be possible to tell at a glance which one is newer

- Maven support: Maven should be able to deal with the format of the version number to enforce the natural order

- Machine incrementable: so you don't have to specify it explicitly every time

What does Maven do?

For reference, Maven version numbers are composed as follows:

<MajorVersion>.<MinorVersion>.<IncrementalVersion>-<BuildNumber | Qualifier>

where MajorVersion, MinorVersion, IncrementalVersion and BuildNumber are all numeric and Qualifier is a string. If your version number does not match this format, then the entire version number is treated as the Qualifier. [See]
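To make the "does not match, so it all becomes the Qualifier" rule concrete, here is a minimal sketch that splits a version string along the lines described above. It is not Maven's own parser. The class name `MavenVersionFormat`, the regular expression and the choice to treat a purely numeric suffix as the BuildNumber are my assumptions, written to mirror the format in the previous paragraph.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class MavenVersionFormat {

    // Assumed shape: <Major>[.<Minor>[.<Incremental>]][-<BuildNumber | Qualifier>]
    private static final Pattern FORMAT =
            Pattern.compile("^(\\d+)(?:\\.(\\d+))?(?:\\.(\\d+))?(?:-(.+))?$");

    /** The parts of a version; null means "not present". */
    static final class Parsed {
        final Long major, minor, incremental, buildNumber;
        final String qualifier;

        Parsed(Long major, Long minor, Long incremental, Long buildNumber, String qualifier) {
            this.major = major;
            this.minor = minor;
            this.incremental = incremental;
            this.buildNumber = buildNumber;
            this.qualifier = qualifier;
        }

        @Override
        public String toString() {
            return "major=" + major + " minor=" + minor + " incremental=" + incremental
                    + " build=" + buildNumber + " qualifier=" + qualifier;
        }
    }

    static Parsed parse(String version) {
        Matcher m = FORMAT.matcher(version);
        if (!m.matches()) {
            // Format not recognised: the whole string is treated as the qualifier.
            return new Parsed(null, null, null, null, version);
        }
        String suffix = m.group(4);
        Long buildNumber = null;
        String qualifier = null;
        if (suffix != null) {
            if (suffix.matches("\\d+")) {
                buildNumber = Long.valueOf(suffix); // purely numeric suffix
            } else {
                qualifier = suffix;                 // anything else is text
            }
        }
        return new Parsed(toLong(m.group(1)), toLong(m.group(2)), toLong(m.group(3)),
                buildNumber, qualifier);
    }

    private static Long toLong(String digits) {
        return digits == null ? null : Long.valueOf(digits);
    }

    public static void main(String[] args) {
        System.out.println(parse("0.1.1-RC1")); // qualifier=RC1
        System.out.println(parse("0.1.1-153")); // build=153
        System.out.println(parse("0.1.1.RC1")); // the whole string ends up in the qualifier
    }
}
```

The last example is the trap to avoid: a single dot instead of a hyphen pushes the entire version into the string qualifier.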

If all the numeric parts are equal, the qualifier is compared alphabetically. "RC1" and "SNAPSHOT" are sorted no differently from "a" and "b". As a result, "SNAPSHOT" is considered newer than "RC1" because it is greater alphabetically. See this page as a reference.

The issue on many projects is how to manage the versions without breaking the Maven format, which would cause Maven to treat your versions as just a Qualifier, i.e. a plain string (not good). Note that a.b.c-RC1-SNAPSHOT would be considered older than a.b.c-RC1, because of the way Maven compares qualifiers.
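The qualifier rules above can be sketched as a small comparator. This is modelled on the older Maven 2 `DefaultArtifactVersion` behaviour as I understand it, not copied from it. Maven 3 switched to `ComparableVersion`, which also recognises well-known qualifiers such as alpha, beta, rc and snapshot, so treat this as an illustration only. The class `QualifierOrder` is hypothetical, and `null` stands for "no qualifier", i.e. a release.

```java
public class QualifierOrder {

    /**
     * Negative if qualifier a is older than b, positive if newer, 0 if equal.
     * null means "no qualifier" (a plain release such as 1.0).
     */
    static int compareQualifiers(String a, String b) {
        if (a == null && b == null) return 0;
        if (a == null) return 1;  // 1.0 is newer than 1.0-anything
        if (b == null) return -1;
        // When one qualifier extends the other, the longer one is treated as older:
        // RC1-SNAPSHOT comes before RC1.
        if (a.length() > b.length() && a.startsWith(b)) return -1;
        if (b.length() > a.length() && b.startsWith(a)) return 1;
        return a.compareTo(b);    // otherwise plain String order
    }

    public static void main(String[] args) {
        System.out.println(compareQualifiers("RC1", "SNAPSHOT") < 0);     // true
        System.out.println(compareQualifiers("RC1-SNAPSHOT", "RC1") < 0); // true
        System.out.println(compareQualifiers("a", "b") < 0);              // true
        System.out.println(compareQualifiers("SNAPSHOT", null) < 0);      // true
    }
}
```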

What to use as an Incremental version number?

I think it is reasonably straightforward for a project to determine the major and minor version, as they often come directly from the business drivers for the project.

The Incremental version can cause issues, as the business may not be interested in it; it is the developers who need it for tracking purposes.

Therefore the incremental number has to be meaningful to them.

So useful numbers could be the database schema version, the sprint number or the feature set that is being implemented (although this can be hard if you have several teams working in parallel).

The simplest way is to start at 1 and let the team leads decide when to increase the number.

Whether to use a Qualifier or a BuildNumber?

There appear to be several schools of thought on this and Maven simply fits with them all.

The Apache method is to use neither, and the SNAPSHOT qualifier allows you to follow this pattern.

However, SNAPSHOT does not tell your developers what the version should be used for.

JBoss has qualifiers (alpha[n], beta[n], release candidate 'CR[n]' and Final) with optional numbers. [See].
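Because qualifiers are compared as text, it is worth checking how a set of JBoss-style qualifiers actually sorts. The sketch below assumes capitalised forms (Alpha, Beta, CR, Final) and uses only Java's plain `String` ordering, which is the alphabetical comparison described earlier. It is not Maven's full algorithm. `JBossQualifiers` is a hypothetical class name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class JBossQualifiers {

    /** Returns a copy of the qualifiers in plain String (character-by-character) order. */
    static List<String> sorted(List<String> qualifiers) {
        List<String> copy = new ArrayList<>(qualifiers);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        List<String> jboss = Arrays.asList("Final", "CR1", "Beta2", "Beta1", "Alpha10", "Alpha2");
        // Prints [Alpha10, Alpha2, Beta1, Beta2, CR1, Final]:
        // the stages line up, but "Alpha10" sorts before "Alpha2".
        System.out.println(sorted(jboss));

        // Case matters too: lower-case letters sort after upper-case ones.
        System.out.println("alpha1".compareTo("CR1") > 0); // true
    }
}
```

The stages happen to come out in the right order, but multi-digit numbers and lower-case qualifiers do not. Zero-padding (Alpha02) and consistent capitalisation avoid both problems.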

The OSGi specification adds a further complication, as does the Eclipse numbering, whose formats are:

- MajorVersion.MinorVersion.IncrementalVersion.TIMESTAMP[-Mn]

- MajorVersion.MinorVersion.IncrementalVersion.CR[n]

- MajorVersion.MinorVersion.IncrementalVersion.Final

Apart from Final, there are only two qualifiers. The first one is for milestone releases and starts with a numeric timestamp. A timestamp of the form YYYYMMDD works well, because it sorts correctly under the compareTo method of the String class, just like any other qualifier.

Optionally, if for some reason two releases are needed on the same day, you can add a sequence number to the end of the timestamp. The next part of the qualifier is the milestone number, where M stands for milestone and n is the milestone number.

After all the milestone releases that add the various functional pieces are complete, and the project and any integrated sub-projects are at least at release candidate stage, a CR release follows. Just as in the traditional model, there may be multiple CR releases depending on feedback from the community.

I would advise the above approach minus the milestone number (assuming the IncrementalVersion is controlled by the development team).
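A timestamp qualifier without a milestone number might be generated as follows. This is a minimal sketch that assumes a two-digit sequence number is always appended (01 for the first release of the day). Keeping every qualifier the same width means plain `String` order matches chronological order. `TimestampQualifier` and the YYYYMMDDnn layout are my own illustration, not part of the Eclipse or JBoss schemes.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class TimestampQualifier {

    /**
     * Builds a qualifier of the form YYYYMMDDnn: the release date followed by a
     * two-digit sequence number for releases made on the same day. The fixed width
     * keeps plain String order identical to chronological order.
     */
    static String qualifier(LocalDate date, int sequence) {
        if (sequence < 1 || sequence > 99) {
            throw new IllegalArgumentException("sequence must be 1..99: " + sequence);
        }
        String stamp = date.format(DateTimeFormatter.BASIC_ISO_DATE); // yyyyMMdd
        return stamp + String.format(Locale.ROOT, "%02d", sequence);
    }

    public static void main(String[] args) {
        String first = qualifier(LocalDate.of(2015, 3, 9), 1);    // 2015030901
        String second = qualifier(LocalDate.of(2015, 3, 9), 2);   // 2015030902
        String nextDay = qualifier(LocalDate.of(2015, 3, 10), 1); // 2015031001
        System.out.println(first + " < " + second + " < " + nextDay);
        System.out.println(first.compareTo(second) < 0 && second.compareTo(nextDay) < 0); // true
    }
}
```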

Setting up Maven

Making sure your version numbers are incremented can be a pain in the arse, but there is a plugin for Maven that helps you manage this.

The Maven 'Release' Plugin

The Release plugin [See] is helpful but not essential. It is used to help a developer release a project with Maven, saving a lot of repetitive, manual work. Its best use here is to let the developer update the version number correctly and without effort.

It is added to the Maven project as follows:

```xml
<project>
  ...
  <build>
    <plugins>
      ...
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-release-plugin</artifactId>
        <version>2.5.2</version>
      </plugin>
      ...
    </plugins>
    ...
  </build>
  ...
</project>
```

Easy!

This allows the developer to issue a command as follows:

```
mvn -B release:update-versions
```

... and the version will be updated to the next increment. They then only need to commit it along with their code.

It is always the last part of the version number that is incremented, and it even works if you have a text qualifier such as CRn (see above).
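The "increment the last number" behaviour can be approximated in a few lines. This is a hypothetical helper that only illustrates the idea. It is not the Release plugin's code, and the plugin's own rules may differ in edge cases. It finds the last run of digits and adds one, leaving any trailing text such as -SNAPSHOT untouched.

```java
import java.math.BigInteger;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class NextVersion {

    // The last run of digits, followed only by non-digits up to the end of the string.
    private static final Pattern LAST_NUMBER = Pattern.compile("(\\d+)(\\D*)$");

    static String nextVersion(String version) {
        Matcher m = LAST_NUMBER.matcher(version);
        if (!m.find()) {
            throw new IllegalArgumentException("No numeric part to increment: " + version);
        }
        // BigInteger avoids overflow on long numeric parts such as timestamps.
        String incremented = new BigInteger(m.group(1)).add(BigInteger.ONE).toString();
        return version.substring(0, m.start(1)) + incremented + m.group(2);
    }

    public static void main(String[] args) {
        System.out.println(nextVersion("0.1.1"));          // 0.1.2
        System.out.println(nextVersion("0.1.1-CR1"));      // 0.1.1-CR2
        System.out.println(nextVersion("0.0.1-SNAPSHOT")); // 0.0.2-SNAPSHOT
    }
}
```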

Maven Versions Plugin

This plugin is much more useful when it comes to controlling your release via your CI server. [See]

(In these examples I'm going to quote Jenkins but this process should work for others.)

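(The Versions plugin is not bundled with Maven, but the versions: prefix resolves without any POM changes because its group, org.codehaus.mojo, is one of Maven's default plugin groups.)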

Unlike the previous plugin, which increments the version, this one allows a specific version number to be set in the POM, like this:

```
mvn versions:set -DnewVersion=0.1.1-RC1
```

where 0.1.1-RC1 is an example of a version number.

The process

Now to tie this together.

The process we want is:

- Jenkins checks out the latest revision from SCM (Subversion, Mercurial, Git, ...)

- Release Plugin transforms the POMs with the new version number

- Maven compiles the sources and runs the tests

- Release Plugin commits the new POMs into SCM

- Maven publishes the binaries into the Artifact Repository

Prerequisites: Jenkins with a JDK and Maven configured, and the Git, Workspace Cleanup and Parameterized Trigger plugins installed.

We're going to start by creating a new Maven job and making sure we have a fresh workspace for every build:

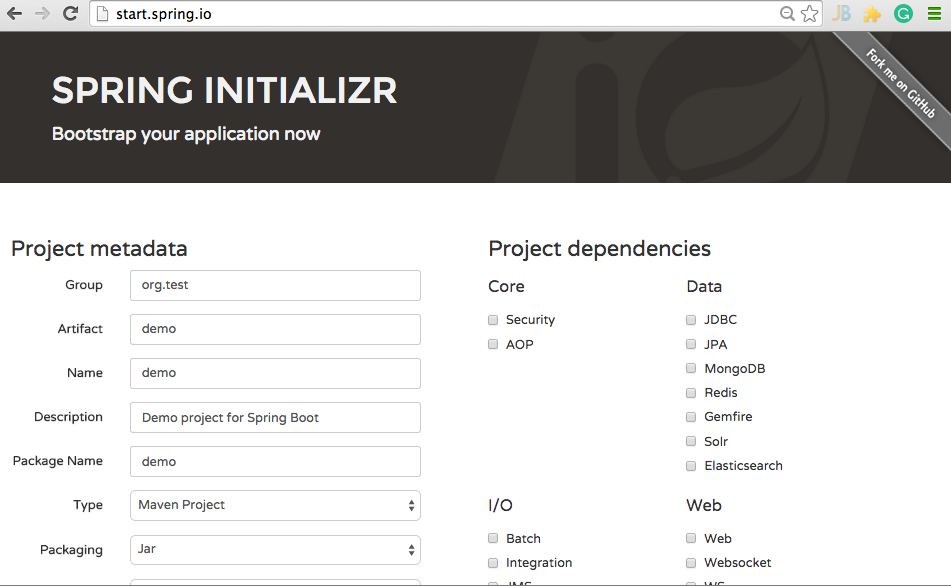

After assigning your SCM, the next step is to set the version upon checkout.

A good version number is both unique and chronological. We're going to use the Jenkins BUILD_NUMBER (the current build number, such as "153") as it fulfills both these criteria wonderfully.

We could use BUILD_ID, which looks like "2005-08-22_23-59-59" (YYYY-MM-DD_hh-mm-ss), or even the Git commit hash using GIT_REVISION.
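To keep the result inside Maven's format, the value from Jenkins needs to land in a numeric slot. The sketch below assumes one possible layout: the team fixes Major.Minor.Incremental and BUILD_NUMBER becomes the BuildNumber after the hyphen. `JenkinsVersion` and `versionFor` are hypothetical names, and the author's actual job configuration may place the number differently. A BUILD_ID or a Git hash in that position would not be numeric, so Maven would read it as a text qualifier rather than a build number. The helper rejects such values.

```java
public class JenkinsVersion {

    /**
     * Hypothetical helper: the team fixes Major.Minor.Incremental and the CI server
     * supplies the numeric BuildNumber, giving <Major>.<Minor>.<Incremental>-<BuildNumber>.
     */
    static String versionFor(int major, int minor, int incremental, String buildNumber) {
        if (buildNumber == null || !buildNumber.matches("\\d+")) {
            // Anything non-numeric here would become a text qualifier instead.
            throw new IllegalArgumentException("Expected a numeric build number, got: " + buildNumber);
        }
        return major + "." + minor + "." + incremental + "-" + buildNumber;
    }

    public static void main(String[] args) {
        // Jenkins exports BUILD_NUMBER to the build environment; "153" is a local fallback.
        String build = System.getenv().getOrDefault("BUILD_NUMBER", "153");
        String version = versionFor(0, 1, 1, build);
        // The value one would pass as: mvn versions:set -DnewVersion=<version>
        System.out.println(version);
    }
}
```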

This is configured as follows:

and in the build step:

And that's it! Every time this job is run, a new release is produced, the artifacts will be deployed and the source code will be tagged. The version of the release will be the BUILD_NUMBER (or GIT_REVISION) of the Jenkins project. Nice and simple.